Day 278 of Self Quarantine Covid 19 Deaths in U.S.: 301,000 GA Vote!!

When I first encounter a new environment and am able to observe it with “beginner mind,” one of the challenges is understanding where to focus my attention. Many environments are a “target rich” landscape of opportunities to improve the process. The Orient phase is about prioritizing where to place your attention and then determining where to begin your potential improvements.

Since my professional background has a heavy dose of technology, I always have to resist the pull of Technology Centered Design. Most technologists leapfrog the Observe and Orient phases and jump right into providing a technological solution to some random part of the customer’s work process. The Orient phase aims to make sense of what you have observed and then prioritize where to focus your prototyping efforts. Each of the frameworks in the Orient phase provides a way to quickly triangulate where to start.

This blog post takes a look at a couple of specific examples in different stages of orientation to see how to apply the frameworks.

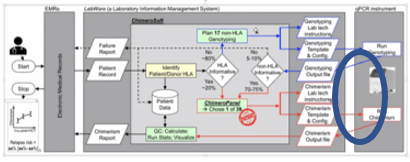

Brandon Fleming is the CEO of an early-stage biotech startup doing chimerism tests for patients who have had bone marrow transplants. The test is moving from a research phase to an MVP phase. Where manual processes were OK for research, they will be too expensive for a V1 product. So Brandon is now moving from the video ethnography of the OBSERVE phase to the ORIENT phase to figure out where to start software prototyping.

Using the video ethnography, he created the flow of work from the time the test is ordered until the time the result is sent back to the oncologist. Brandon asked, “So these are the steps that we’ve identified in the process. Is there a framework or process to help guide me to where I should start the prototypes for software product development?”

Brandon had read through the first three “Observe, Don’t Ask” blog posts. He shared, “There is a lot to absorb in that third post. I know I need to understand it, but it seems overwhelming.”

We both chuckled and realized that we had our topic to explore for our weekly mentoring session over Zoom.

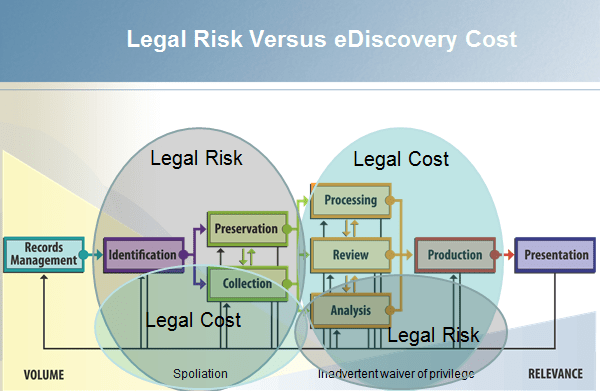

“I think we are going to focus on the ‘where are the costs?’ thinking from the eDiscovery diagram, but let’s look at the first couple of frameworks.”

We looked at the workflow and realized that almost every step was a hassle: the lab tech followed a printed step-by-step process with a lot of retyping from one system to another. These manual translation steps were tedious and prone to errors. So lots of hassles. We might have stopped here to re-draw the hasslemap, but we kept going.

We looked at the SD Logic Augment sequence and realized that we were moving from the manual (collaborate) to the augment step. We agreed that we were too early in our understanding to examine outsourcing (delegate) or full automation (self-service).

Based on what Brandon had learned during his observation and follow-up interviews, we looked at each step in the workflow in the context of the costs and risks of that step. When we developed Attenex Patterns, we realized that we could provide a valuable product without having to automate every step in the eDiscovery workflow. Further, we wanted to minimize our risks. As we looked at each eDiscovery step, we realized that the four steps in the oval of “Legal Cost” were where we should spend our Augmenting efforts. We could dramatically reduce costs and not incur a lot of legal risk for our clients or our company.

As we worked through the Chimerocyte workflow diagram, we assigned cost estimates to each step of the existing process for materials, human labor, machine usage and capitalization. We then looked at each step in terms of what it would cost a software developer to augment that step. Finally, we looked at each step from a risk perspective: the cost of getting something wrong at that step. The two big risks Brandon faced were having something go wrong such that he needed to re-run the test and/or providing wrong information to the oncologist (a false positive or a false negative).

A simple heuristic for prioritizing which step(s) to augment is to find steps that have a high cost per test, a low cost for the software developer in terms of time and complexity, and a low risk.
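As a rough illustration, the heuristic can be sketched as a filter over the workflow steps. Everything in this sketch is an assumption made for illustration: the `Step` type, the 1-to-5 rating scales, the thresholds, and the ratings themselves are hypothetical and are not Brandon's actual estimates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    cost_per_test: int  # 1 (low) to 5 (high): materials, labor, machine time
    dev_effort: int     # 1 (low) to 5 (high): developer time and complexity
    risk: int           # 1 (low) to 5 (high): cost of getting the step wrong


def augment_candidates(steps, min_cost=4, max_effort=2, max_risk=2):
    """Return steps with high per-test cost, low developer effort, and low risk.

    The thresholds are arbitrary defaults chosen for this sketch.
    """
    picked = [
        s for s in steps
        if s.cost_per_test >= min_cost
        and s.dev_effort <= max_effort
        and s.risk <= max_risk
    ]
    # Most expensive steps first, since they offer the largest savings.
    return sorted(picked, key=lambda s: s.cost_per_test, reverse=True)


# Hypothetical workflow with made-up ratings, for illustration only.
workflow = [
    Step("receive test order", cost_per_test=2, dev_effort=3, risk=2),
    Step("set up lab machine", cost_per_test=5, dev_effort=2, risk=2),
    Step("collect results from lab machine", cost_per_test=4, dev_effort=2, risk=2),
    Step("report result to oncologist", cost_per_test=3, dev_effort=3, risk=5),
]

candidates = augment_candidates(workflow)
for step in candidates:
    print(step.name)
```

A hard filter like this keeps the conversation simple: a step either meets all three criteria or it waits. A weighted score would also work, but it can hide a high-risk step behind a large cost saving.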

The steps that met those criteria were the setting up of the lab machine and getting the results back from the lab machine (within the blue oval). Brandon now had his starting point for where to prototype.

They built the prototype and then were able to test it on non-patient samples (a scarce supply). They had their first win. They could put this component into supervised use by the experienced lab technician and the scientist and measure whether it had a positive or negative effect on the per-test cost.

They also made a complete loop through the “Observe, Don’t Ask” Cycle.

Brandon and his team are not ready to ship an MVP, as they still have several steps that they want to automate, such as pulling the patient data from the medical record system. However, they are well on their way by using video to observe the workflow and show the developers what actually happens in practice. The video also let them determine the costs of executing the test.

Observe, Don’t Ask. Show, Don’t Tell. Prototype, Don’t Guess. Act, Don’t Delay.