A successful product has many parents. No one claims a failed product.

Attenex Patterns was both a successful AND an innovative visual analytics product. The seeds of that success were planted in the six months before and after the founding of the company. The creation of the company and the creation of the product represent forty years of lessons and mentoring come to life. This chapter in the Attenex history provides both a context and a framework for what went into the success, and a reflection on what we learned on the wild ride.

Like all good companies, we tried to shape and control our story. The public story of how Attenex came into being can be found in the AmLaw Technology article “Seattle Sleuth,” published in winter 2003. The article was aimed as much at promoting Preston Gates & Ellis (now K&L Gates) as at promoting Attenex.

In the early spring of 2000, Marty Smith (partner at Preston Gates & Ellis) was on his semi-annual visit to Pacific Northwest National Laboratory (PNNL) in Richland, WA, which is operated by Battelle. Marty was at PNNL as part of his role with the Washington Software Alliance (now the Washington Technology Alliance). The visits were part of PNNL’s effort to make Washington software industry professionals aware of its work, so that they might connect with innovations that could then be commercialized. As Marty sat through six hours of presentations by one group after another, he was enthralled with the SPIRE tool (now IN-SPIRE) and related projects.


SPIRE was a tool developed for the CIA, NSA, DIA, and FBI to analyze documents and display the documents in an abstract three dimensional space. SPIRE worked on expensive SUN workstations and was essentially a single user system for individual analysts. As Marty was watching the demo, he connected this potential solution to the explosion in costs for electronic discovery for litigation that Microsoft was encountering. He asked whether the same tool could be used to process emails. They said sure and showed him an example.

While Marty was not a litigator, he was on the Preston Gates & Ellis committee that managed the Microsoft account, and he was well aware of the demands from Bill Neukom (formerly Microsoft General Counsel) to help stop the exponential increase in the cost of electronic discovery. Martha Dawson (Preston partner and head of the Document Analysis and Technology Group, DATG) had done an excellent job of continually improving the electronic discovery process through creative measures like moving reviews to a contract pool of review attorneys instead of using expensive associates and partners. Yet everyone was realizing that continuous improvement could not begin to keep up with costs rising steeply due to the exponential growth in the volume of Microsoft email.


Full of excitement, Marty came back to the litigators (Martha Dawson and David McDonald) and shared his observations about this great tool at Battelle that could really help reduce the costs of electronic discovery. When they asked how, Marty explained that the document analysis and visualization would make it much easier to see which documents were related to each other and then quickly bulldoze out the junk. They stared back at him and basically dismissed the idea that it would have any effect on their well-honed process. But Marty at least got them to agree to visit PNNL and view a demo of the technology. A month later they did, and while impressed with the eye candy, the litigators still didn’t believe that it would help them with their problem.

Knowing the extent of the problem at Microsoft, Marty knew he couldn’t give up, and the firm couldn’t afford to lose the revenue if Microsoft decided to move reviews to another law firm or offshore. In addition to his role in the Technology and Intellectual Property (TIP) practice at Preston Gates, Marty was also Chair of the Working Smarter committee. This committee looked for ways that Preston could improve its bottom line through the application of technology. The effort grew out of Gerry Johnson’s initiative, after he became Preston Gates Managing Partner, to leave behind a legacy of innovation as his lasting contribution to the firm. So out of this committee’s budget, Marty decided to buy a SUN workstation to test the SPIRE software with Martha Dawson and DATG (now the K&L Gates e-DAT Group).

The SUN workstation arrived in August 2000, and Martha agreed to assign one of her matter leads (Gregory Cody) to rerun a previously reviewed matter on the SUN. With synchronicity afoot, Marty and I met at a working dinner on Bainbridge Island for the BEST Foundation, of which our wives were officers. Marty had been my contracts attorney when I was VP of Engineering at Aldus Corporation.

While standing around making small talk, I asked Marty what he was up to these days. In his usual exuberance, he related that he was really excited about the technology projects he was overseeing. “We are doing some really interesting work with information visualization, computational linguistics, natural language processing, knowledge management, data mining, and complex document assembly.”

I laughed and said, “I didn’t know there was a single lawyer who could string those terms together, let alone have some understanding of what they mean.” Having just left Primus Knowledge Solutions and being in the process of forming my own consulting company, I said that I would be interested in coming by to see what they were up to and in getting pointers to the Battelle folks so I could learn more about their tools. We exchanged the perfunctory “sure, let’s keep in touch” and said good night.

The next morning I got a call at 8am from Marty asking me to get my rear end into Preston Gates in Seattle as soon as I could. He had described my skills to Gerry Johnson and told him he thought I would be just the right person to help Preston Gates evaluate the technology. He also said that if the technology worked, Preston would be very interested in forming a company to bring the technology and solution to market. So he wanted to make sure that I looked at the evaluation both from a technology standpoint and from a business formation standpoint.

Within a couple of weeks, Gregory Cody had processed part of a recent matter that DATG had already reviewed. The preliminary statistics were amazing. With a tool designed for something else, he was able to get two to three times the productivity of the current approach of looking at documents one email at a time in Outlook. He did not miss any documents that had been found with the current method, and he found several responsive documents that the linear review process had missed. Everyone was blown away by the implications and by the big jump in productivity. With this first test, using a tool not designed for this purpose, we had doubled or tripled productivity and improved quality in an already innovative environment that had worked very hard to get 10 to 20% productivity improvements each year.

Now that we knew we were on to something, the evaluation effort picked up a lot of steam. We brought in four more lawyer reviewers, trained them on the technology, and bought a few more SUN workstations. This group went through five more matters of differing degrees of complexity to see if different reviewers on different matters could achieve the same kind of productivity and quality gains. All of the tests were successful.

In parallel, we started negotiating with Battelle to get the changes that were needed to put the system into production and to figure out a business relationship for moving forward. On the technology front, SPIRE needed a lot of work on the importing and exporting side to eliminate several manual steps that the Preston Gates IT people were having to go through. One of the key issues was to develop a way to dedupe the materials being fed into the analysis engine to further reduce the amount of material that the attorneys would have to review.

If you think about the nature of email, there are a lot of duplicate emails within an organization. When I send an email to somebody, there is a copy in my outbox and a copy in your inbox. If I send an email to several people, the duplicate email problem multiplies: there is one copy for me plus one for every recipient.

We quickly learned that Battelle was not able to move at the speed of development we needed. We wanted to move at Dot Com speeds, while they were used to moving at government speeds, or “furlongs per fortnight.” As a result, David McDonald finally got fed up and over a weekend wrote a Visual Basic program to dedupe Outlook/Exchange .PST files. This program eventually became known as MiniMe and was put into production by Kim Church’s IT group within a few weeks.
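MiniMe’s actual matching rules aren’t described here, so what follows is only a minimal Python sketch of the general idea behind email deduplication, under stated assumptions: each copy of a message is fingerprinted by hashing the fields that should be identical in every mailbox it landed in (sender, sorted recipients, sent time, subject, whitespace-normalized body), and only the first copy of each fingerprint is kept. The `Email` record, its field names, and the choice of SHA-256 are hypothetical illustrations, not the MiniMe or RST design.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Email:
    # Hypothetical minimal message record; real PST items carry many more fields.
    mailbox: str                 # whose PST this copy was found in (not fingerprinted)
    sender: str
    recipients: tuple[str, ...]
    sent: str                    # sent timestamp as text, e.g. "2000-08-14T09:30"
    subject: str
    body: str


def fingerprint(msg: Email) -> str:
    """Hash the fields that should match across every copy of one message."""
    parts = [
        msg.sender.strip().lower(),
        ",".join(sorted(r.strip().lower() for r in msg.recipients)),
        msg.sent.strip(),
        msg.subject.strip(),
        " ".join(msg.body.split()),  # collapse whitespace differences between copies
    ]
    # Join with a unit-separator character so field boundaries can't blur together.
    return hashlib.sha256("\x1f".join(parts).encode("utf-8")).hexdigest()


def dedupe(messages: list[Email]) -> list[Email]:
    """Keep the first copy of each distinct message, preserving input order."""
    seen: set[str] = set()
    unique: list[Email] = []
    for msg in messages:
        key = fingerprint(msg)
        if key not in seen:
            seen.add(key)
            unique.append(msg)
    return unique
```

For example, one message sent to two colleagues shows up in three mailboxes (the sender’s plus one per recipient); all three copies share a fingerprint, so only one reaches the reviewers. A production tool would also have to decide how to treat forwards, replies that quote the original, and attachments, which this sketch ignores.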

I have to admit that I felt pretty embarrassed that a senior partner with Preston Gates would sit down and write a program to do the deduping. Having been away from coding for so long, I would never have thought of that option. I marveled at David’s ability to be both a lawyer and a good technologist. A few weeks later I found out a bit more about McDonald. I knew from my previous interactions with him that he was a renowned intellectual property litigator and that he and his litigation partner, Karl Quackenbush, had represented Microsoft in many of its high-visibility IP cases. What I didn’t know was that David was so bored at Harvard Law School that during his second year he went over to MIT and earned a master’s in Computer Science.

While we were getting all of the good news from our testing of SPIRE, on the business front we were getting nowhere. When we started, we assumed that we would set up a joint venture with Battelle to commercialize the technology. They would do the product development and we would do the sales, marketing and support. As time went by, they proved slow and unreliable at meeting their commitments to make the changes to SPIRE that we needed. When I did the code due diligence, it became clear that SPIRE was a 10+ year old “spaghetti code” tool that would be very difficult to maintain and support. They were rewriting the code for Windows NT (now called IN-SPIRE), which looked promising, but it was still a single-user version and we wanted up to 50 attorneys to be able to work on the system simultaneously.

It became clear that they had no money to invest in the joint venture; all they could contribute was their technology. The joint venture would have to pay them to continue developing the technology, and they would not give the joint venture any rights to the source code. Then we found out that they had licensed the technology to two other spinouts: Cartia (ThemeScape), since bought by Aurigin, and OmniViz, a biological systems visualization company. These spinouts had no restrictions on which markets they could supply the technology to, so we wouldn’t be able to get any kind of exclusive for the legal market. In short, Battelle was not going to be a very good partner.

In parallel with these business activities, I was doing a lot of research on what was publicly available about information visualization and visual analytics. I became convinced that it would be relatively easy to build the necessary document analysis and visualization from the ground up. You needed a lot of computing horsepower, but the basic algorithms for getting started were published. Further, it was clear that you needed to take a database approach to the problem so that multiple attorneys could review a matter at the same time. I could not convince Battelle that this was a mandatory requirement.

The other fly in the ointment was finding a business model that would let us make money in this environment. We actually spent more time figuring out a business model than evaluating the technology. When you have an application of technology that delivers 10X (ten times) productivity improvements, one of the first things you destroy is your customers’ business model. Up to this point, all eDiscovery reviews were billed by the hour. You counted the number of hours the reviewers worked, multiplied by their hourly billing rate, and sent the bill to the client. Your profit was the delta between the billing rate and the labor rate, less some overhead. Yet with this technology, even a 5X improvement in productivity would cut the billable hours to a fifth, cutting both your customers’ top line and bottom line by 80%. We struggled for months to figure out how to solve this dilemma. It is generally not considered good practice to destroy the business model of your customers.
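The 80% figure follows directly from hourly billing: a 5X productivity gain cuts billable hours to a fifth, and both revenue and gross margin scale with hours. The short Python sketch below makes that arithmetic explicit. The hour count and the billing and labor rates are made-up illustrative numbers, and overhead is ignored.

```python
def hourly_economics(hours: float, bill_rate: float, labor_rate: float) -> tuple[float, float]:
    """Return (revenue, gross profit) for a review billed by the hour."""
    revenue = hours * bill_rate
    gross_profit = hours * (bill_rate - labor_rate)  # overhead ignored in this sketch
    return revenue, gross_profit


# Hypothetical matter: 1,000 review hours, billed at $100/hour, costing $60/hour in labor.
HOURS, BILL_RATE, LABOR_RATE = 1000.0, 100.0, 60.0
SPEEDUP = 5.0  # 5X productivity: the same documents take a fifth of the hours

before = hourly_economics(HOURS, BILL_RATE, LABOR_RATE)
after = hourly_economics(HOURS / SPEEDUP, BILL_RATE, LABOR_RATE)

for label, old, new in zip(("revenue", "gross profit"), before, after):
    drop = 1 - new / old
    print(f"{label}: ${old:,.0f} -> ${new:,.0f} ({drop:.0%} lower)")
```

Whatever rates you plug in, both lines fall by the same fraction, 1 - 1/5 = 80%, because every term in the hourly model is proportional to hours worked.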

In the end the answer was easy, but it took us a long time to see it because the “billable hour” is so wired into the legal profession. We had to move to some form of fixed-price billing. We eventually hit on dollars per megabyte processed. This also helped customers budget, because once they knew how much data they had in megabytes, they would know what their bill was going to be. So Preston restated its historical billing in megabytes instead of hours, came up with its current billing rate per megabyte and what its labor cost would be with the new technology at a 3X overall productivity factor, subtracted the two, and then decided to split the difference with the client. Thus, both parties won. The customer got an immediate 30% discount on their billings and Preston got an equivalent uplift in its profits. Further, Preston was now incented to be as efficient as possible in reviewing documents: every increase in productivity would produce an equivalent gain in the law firm’s profitability.
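The text doesn’t give Preston’s actual rates, so the sketch below only illustrates the mechanics under one reading of “split the difference”: the labor cost saved per megabyte by the productivity gain is shared equally, with half returned to the client as a lower per-megabyte price and half kept by the firm as extra margin. The function name and every number are hypothetical; with these made-up inputs the discount comes out to 20%, not the 30% Preston reached with its own figures.

```python
def per_megabyte_price(bill_per_mb: float, labor_per_mb: float, productivity: float) -> dict[str, float]:
    """Reprice a review per megabyte, splitting the labor savings 50/50 with the client.

    bill_per_mb and labor_per_mb are historical hourly-billing figures restated per megabyte.
    This is one hypothetical reading of "split the difference", not Preston's formula.
    """
    new_labor = labor_per_mb / productivity   # labor cost per MB with the new tool
    savings = labor_per_mb - new_labor        # labor cost eliminated per MB
    new_price = bill_per_mb - savings / 2     # the client's half comes off the price
    old_margin = bill_per_mb - labor_per_mb
    new_margin = new_price - new_labor        # the firm keeps the other half
    return {
        "new_price": new_price,
        "client_discount": 1 - new_price / bill_per_mb,
        "margin_uplift": new_margin - old_margin,
    }


# Hypothetical inputs: $100/MB historical billing, $60/MB historical labor, 3X productivity.
terms = per_megabyte_price(100.0, 60.0, 3.0)
print(terms)

# A fixed per-MB price makes the bill predictable, e.g. for a hypothetical 5,000 MB matter.
print(f"estimated bill: ${5000 * terms['new_price']:,.0f}")
```

Under this reading the client’s dollar savings per megabyte always equal the firm’s margin uplift. And once the price is fixed, any further drop in labor cost flows straight to the firm’s margin, which is the efficiency incentive described above.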

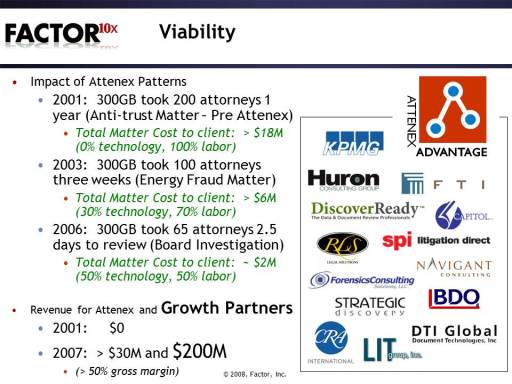

The following diagram shows the progression of how the productivity evolved over time and how Attenex and our channel partners revenue increased:

It was now January 2001. The next big issue was how to fund and staff what was to become Attenex. From the beginning of my involvement, I had put forth a skeletal business plan that estimated we would need $10 million in funding to go through the first several phases of product and market development. This business plan also called for traditional venture capital funding. The problem was that the economy was tanking and the Dot Com Bust was under way, so venture capital was drying up for new ventures. In late January, though, we had a big breakthrough. In working through the business model, we realized that if we could get to even a 5X productivity increase, then Preston Gates could afford to fund the company with the excess profits it would make from the DATG business by using our technology, assuming that it continued to increase the amount of electronic discovery business it generated from its clients. Thus, Preston would both solve its clients’ problem of reducing the cost of electronic discovery and create a new company that could generate additional value over time by selling the products to other law firms.

From a staffing standpoint, we were at a fortunate time. Lots of good software engineers were available as the Dot Com Bust occurred. My first choice for the key software engineer and architect of the products was Dan Gallivan who was at Akamai. I had talked to Dan over the preceding several months to check my assumptions on how easy or difficult it would be to develop something like the capabilities of SPIRE. He thought it was doable but that it would be harder than I thought. I kept feeding him articles that I was coming across and as he began to understand the problem he was coming to the same conclusion I was. However, he was happy at Akamai. Then I got a call one day that Dan had just found out that he and his team would be laid off soon and that they would really like to continue working together as a team. He wanted to know if Preston was serious about forming a company, because if they were, he felt he could bring his core team over to the future Attenex.

I knew that Preston was serious as we had made several business plan presentations to the Executive Committee and to the partners and that they were close to making a decision. All along I was very clear that I had no interest in taking an active management role with the company as I wanted to continue with my own consulting firm and continue teaching graduate school. I also wanted no part of being in a venture funded company. Then when it became a possibility for Preston to fund the company I reluctantly agreed to be the founding CEO until such time as we were big enough for me to go back to focusing full time on the product.

Everything came together the last week of March 2001. The Preston Gates Executive Committee approved the business plan and the funding for the new company. We filed the articles of incorporation and we made job offers to the team of seven from Akamai. We located some space next to the DATG group on the 14th floor of the Bank of America building and we opened our doors on Monday, April 1, 2001. We had no desks, no computers, no office supplies, nothing. However, we did have a great starting team consisting of:

- Skip Walter

- Dan Gallivan

- Eric Robinson

- Lynne Evans Koyamatsu

- Kenji Kawai

- Holly Jameson Carr

- Tony Krebs

- Tamara Adlin

We had plenty of flip chart paper and pens and we started designing the business, the organization, and the products. By the end of the week, we had computers for everyone along with the desks and basics to start actually producing something. We also had a name – Newco Inc. None of us could believe that this name wasn’t taken when we went to file, but there it was. We grabbed it. It would have to do for a while until we could get a marketing type in to figure out a name for what we were up to.

Formation of Newco – April 2001

While we were working the business plan side to form the company, DATG was putting the MiniMe deduping tool and the SPIRE application into production for electronic discovery. DATG was getting hit with an increasing volume of discovery, and the combination of these two tools was helping stem the tide. We knew it would take us a while to have something that would go beyond SPIRE, so the first order of business was to clean up MiniMe, make it more robust, and improve its performance. The engineering team replaced MiniMe with some serious Java code and within two months had the tool up and running in production. Our first product component, the Redundancy Suppression Tool (RST), achieved our goal of outperforming MiniMe by a factor of 10. Everyone was quite impressed and quickly saw the benefit of hiring experienced software engineers.

During this hectic startup period, we ran into our first set of intellectual property and ethics challenges. During the due diligence evaluation of SPIRE and Battelle, I had looked cursorily at the SPIRE code and had the Battelle folks give me general descriptions of their approach to analyzing and visualizing document collections. Our attorney friends made it clear that we could not take any chances of imparting any of that knowledge to the engineers. So I had to stay clear of the design process for Patterns, and we had to make sure that none of the engineers ever saw the SPIRE application being used by DATG. All I could tell the team was that we knew that visualizing documents would dramatically increase the productivity of electronic review and that we needed a system that could handle multiple reviewers working on the same matter at the same time. Thus the team would need to take a database approach rather than the sequential-file, in-memory approach that was the basis of SPIRE. While everyone was frustrated with these arrangements, setting up the Chinese wall was a great insurance policy against any threat of intellectual property violations.

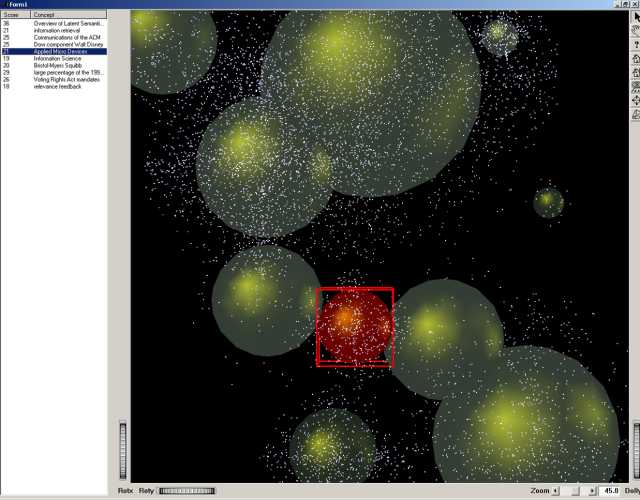

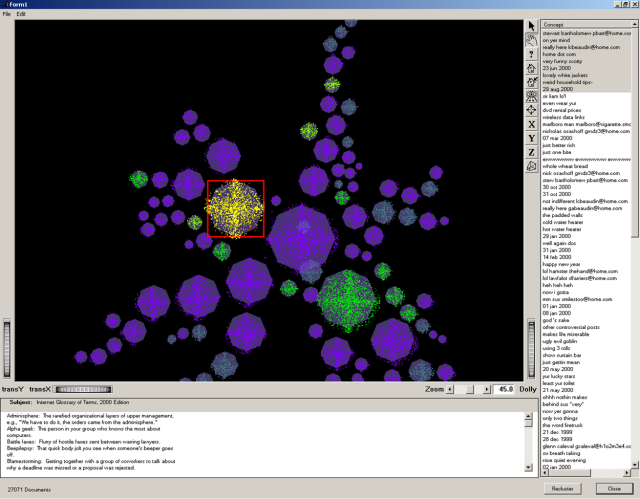

By late May, the team developed their first prototype of our document visualization tool, code named Haystack, as in finding needles within a haystack. Certainly, the quick development of RST had impressed everyone but there wasn’t much to see. With Haystack we had our first visualization and UI prototype. The following diagram illustrates what seems so crude now:

The Preston partners closely involved with funding us were most impressed. Yet, during the demo a light bulb started to go on for our Preston Gates funders: “If you could develop this so quickly, do we really have sustainable IP? Can’t others develop the same thing as quickly as you just did?” The following email captures the dialog with the Chairman of the Attenex Board, Gerry Johnson, explaining software innovation ups and downs:

-----Original Message----- From: Skip Walter [mailto:skip@newco.prestongates.com] Sent: Wednesday, May 23, 2001 11:08 AM To: marty@prestongates.com; marthad@prestongates.com; davidm@prestongates.com; marywi@prestongates.com Cc: Dan Gallivan; gerryj@prestongates.com Subject: Haystack Visualization Demo

As part of my weekly meeting with Gerry I am going to show him a demo of the visualization prototype that we’ve been working on codenamed haystack. The demo with Gerry is somewhere between 1 and 1:30pm. If you get a chance, join us then or come down later this afternoon. We’ll keep a copy of the demo available if today doesn’t work out so that we can show you later at your convenience.

The operative word here is prototype: it is subject to all the unreliability of early code.

We’re real happy with the way the architecture has turned out. We’re disappointed with the visualization layer as the graphics package we used for prototyping (AWT) was pretty inappropriate for the task. The other layers are working great and we can demonstrate the loading of Outlook/Exchange .PSTs into the SQL database, analyzing of those files, frequency calculations, clustering, orienting, rendering the clusters to the screen, and then some level of manipulation.

We’ve learned a lot in an incredibly short time and it appears that most of the architectural decisions that the team made worked out well. We’re pretty happy with the early results from the compute intensive tasks of analysis, clustering and orientation.

Now that we’ve got a baseline we can start the user testing for the UI.

We’ll also show a quick prototype that we’ve done in Excel that looks at the direct manipulation of the key concepts which is leading us to believe that a mixed mode interface of the detail text and the visualization of the whole may be a better way to go.

I am simply in awe of what the team has done in less than three weeks of working the problem. As we shift from technology centric design and work with the HCD team we should get to a very usable tool very quickly.

Look forward to seeing you soon.

Skip
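The email lists the layers of the Haystack prototype: loading .PSTs into a SQL database, analysis, frequency calculations, clustering, orientation, and rendering. The real prototype used Inxight’s LinguistX for linguistic analysis and a Microsoft SQL database for storage, and its algorithms aren’t described here. As a rough illustration of just the frequency and clustering steps, here is a hypothetical Python sketch that swaps in plain word counts, cosine similarity, and a simple single-pass “leader” clustering; the function names and the 0.3 similarity threshold are assumptions for illustration only.

```python
import math
import re
from collections import Counter


def term_vector(text: str) -> Counter:
    """Bag-of-words term frequencies; a crude stand-in for real linguistic analysis."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors (0.0 if either is empty)."""
    dot = sum(count * b[term] for term, count in a.items())  # missing terms count as 0
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def leader_cluster(docs: list[str], threshold: float = 0.3) -> list[list[int]]:
    """Single pass: each document joins the most similar cluster seed, or seeds a new cluster."""
    vectors = [term_vector(d) for d in docs]
    seeds: list[int] = []            # index of the first document in each cluster
    clusters: list[list[int]] = []
    for i, vec in enumerate(vectors):
        best, best_sim = None, threshold
        for c, seed in enumerate(seeds):
            sim = cosine(vec, vectors[seed])
            if sim >= best_sim:
                best, best_sim = c, sim
        if best is None:
            seeds.append(i)
            clusters.append([i])
        else:
            clusters[best].append(i)
    return clusters
```

Leader clustering depends on document order and on the threshold, which is one reason production systems use more robust methods. The point here is only that term frequencies plus a similarity measure are enough to group related messages before anything is drawn on screen.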

-----Original Message----- From: Johnson, Gerry (SEA) [mailto:gerryj@prestongates.com] Sent: Wednesday, May 23, 2001 2:18 PM To: Skip Walter Subject: RE: Haystack Visualization Demo

thanks for the show Skip – sorry for the dumb questions

-----Original Message----- From: Skip Walter [mailto:skip@newco.prestongates.com] Sent: Wednesday, May 23, 2001 2:28 PM To: Johnson, Gerry (SEA) Subject: RE: Haystack Visualization Demo

There are no dumb questions in this realm. What was impressive is how quickly you and David saw the potential of the concept frequency map. I expect David to jump on those concepts because of his closeness to the problem. The treat is when you find something that is pretty quickly understood by those who don’t spend all day close to this kind of problem. From a software development standpoint, that’s when you get really excited. So to see you “get it” so quickly and then start to ask great questions about how and where it could be used was wonderful.

Thanks again for your trust and support. We’re racing to get this stuff into Martha’s hands to start making a real difference.

-----Original Message----- From: Skip Walter [mailto:skip@newco.prestongates.com] Sent: Wednesday, May 23, 2001 5:51 PM To: Johnson, Gerry (SEA) Subject: RE: Haystack Visualization Demo

One of the questions you asked today was whether this was a hard problem that we are working on. I gave you a quick answer. Let me give you a little more reflective answer.

My assumption as to the intent of the question is “if we can get this far in 2-3 weeks then will others be able to replicate what we are doing in a relatively short amount of time?”

My answer gets at the paradox of software development and in many ways the paradox of any creative insight. Over the centuries many things have seemed impossible until someone has the creative moment when an “Ah hah” shows up. Once they reduce the idea to practice and show it to others, everyone goes “of course, why did we think the problem was so hard.”

What previously took a lot of work now becomes relatively easy to copy.

On one level, visualization of the magnitude that we are talking about is a very tough problem to crack. I’ve been interested in the technology and its applications for well over 30 years since I first became exposed to EKG and EEG processing on a PDP-12 minicomputer with a graphics display. Lots of great researchers and minds have tried to figure out how to do interactive visualizations that actually have some real world payback. Good results have been few and far between in the white collar or professional office productivity arena.

As you can see by the many layers that we had to implement to get to the first level of a visualization tool, this is a problem that is difficult in many areas:

- How do you make meaningful semantics out of a single document and a document corpus?

- How do you relate documents to one another?

- How do you display the results in such a way that you can see the whole and view the details?

- How do you manipulate the display to achieve some domain specific result?

Until very recently, even these four things were not possible on their own, let alone working together to produce what is needed for visualization. In many ways the problem is similar to what Peter Senge describes about the development of the commercial aviation industry in his book The Fifth Discipline: The Art and Practice of the Learning Organization:

“The DC-3 brought together, for the first time, five critical component technologies that formed a successful ensemble. They were: the variable-pitch propeller, retractable landing gear, a type of light-weight molded body construction called “monocoque”, radial air-cooled engine, and wing flaps. To succeed, the DC-3 needed all five; four were not enough. One year earlier, the Boeing 247 was introduced with all of them except wing flaps. Lacking wing flaps, Boeing’s engineers found that the plane was unstable on take-off and landing and had to downsize the engine.”

At least two things are key to our being able to race as fast as we are:

- We have a real problem that is suitable to the technology. For this we owe thanks to Marty Smith for his insight of connecting visualization to electronic discovery when he went to visit Battelle a year ago. Then Martha and David and their crew picked up on the insight to show that it really did work.

- The research on the component technologies (document semantics, computable clustering algorithms, and rendering software/hardware) is developing just as we are getting the cheap computing cycles, large screen displays, fast networks, and very large storage devices.

Thirty years ago when I was working on this problem, we had 8,000 words of memory (versus 512 megabyte personal computers today) on a computer that was 1/10,000 the speed of today’s computers. Even five years ago there wasn’t enough computing power on a supercomputer to do what we showed you today on our desktop.

So at one level, the problem is quite difficult when you look at all the things that had to be solved before we could even start our development activity. On the other hand, now that they are basically solved and we show people our tool then other people can more easily replicate it.

The other thing that comes into play is whether other people will be motivated to copy what we are doing. There are “products” in the world that for one reason or another people just don’t copy. Disney has shown people how to build a successful theme park for over thirty years, yet no other theme parks come close to the Disney experience. At a much smaller level, Primus Knowledge Solutions has shown how to build a successful knowledge management product but nobody has decided to go after that market yet.

On the other hand, disk drive manufacturers rapidly copy innovations and technology from other manufacturers (see Clayton Christensen’s The Innovator’s Dilemma). I haven’t been able to find a pattern to this range of competitive motivation or lack thereof in my own research or other studies.

On a more myopic note, last September when I started on this project my initial assumption was that the visualization was a very hard problem, largely because I’ve been interested in it for 30 years and I haven’t seen anyone be commercially successful. Then we saw a couple of good research projects with our Battelle friends in SPIRE and Starlight. At first blush, both looked worthy of being the result of very bright people working on the problem for a very long time. But for different reasons in each case, it became clear that if you started today with the advances in the above component technologies and research, then the problem was a lot easier to solve. It appeared that you could replicate what they were doing in a matter of months.

Four months ago when I first talked to Dan about the opportunity and the visualization module and how long he thought it would take him to develop a tool, his answer was several person years. I kept after him and kept pointing him to research papers and nothing was denting his estimates. Then, something clicked once he saw the quality of the linguistic analysis packages like those from Inxight. He realized the problem was more solvable than he previously thought. His estimates to get to a prototype came down to person months. Then, once he started on it, he realized that between the algorithms described in the research papers, Inxight’s LinguistX, the capability of the Microsoft SQL database, and the experience of the different team members that the prototype could be done in person weeks. Even though Dan is a very experienced computer architect and graphics expert it still took him over four months to see that it was a matter of integrating ensemble technologies rather than having to invent all of the pieces first.

Lastly, as I've tried to convey several times, getting to a prototype, and even getting the tool into production, is far less time consuming than everything it takes to come up with a generalizable product.

Yet, what excites us is that we now have more than enough of a working architecture to start doing quality design and usability prototype iterations with the target users and be able to turn those prototypes around quickly. We have something that we can credibly show to others (like KPMG) that is all ours.

What I can’t answer is what others will do once they see what we’ve done as we put the product to use in other law firms and clients. It probably comes down to economics. Nobody will do much until it appears that there is a $100 million market.

Which brings me to the last example, how we came to understand the economics and business model of Adobe and Photoshop. When we did the merger between Aldus and Adobe, we couldn’t wait to get to the point in the process where both sides shared their detailed financials. The jaw dropping surprise for us at Aldus was the size of the Photoshop revenue stream. The most optimistic market analyst pegged the total size for photo editing software at that time (1993) at $15 million per year.

Given that Aldus had $5 million of that market with our product PhotoStyler, we felt we were in pretty good competitive shape. Imagine our competitive embarrassment when we found out that Photoshop revenues were greater than $150 million per year. Nobody knew. That secrecy allowed Adobe to keep the market for so long, because nobody else thought it was a very big market.

At this point, I am trusting that we are at the confluence of the deep expertise that Martha Dawson and her team have built, the deep expertise that Dan and his team have, and the continued increase in computing performance from software and hardware advances. Given this combination, I believe that by being first in this arena we will be OK. Staying first requires us to execute marketing and sales as well as we are executing product development, and to have a comprehensive vision for where this stuff goes.

Also, the lesson that I’ve learned from the association with the Institute of Design is the importance of having not just one innovation but a system of innovations. Somebody may copy one or two of the things you do, but they can’t begin to copy the system. A key part of my deciding that Attenex was the place to invest my time and talent was the wealth of ideas that Preston Gates has as a result of the Working Smarter initiative, along with my working relationship with Dan Gallivan and our ability to quickly generate a system of innovations.

What you saw this afternoon represents a very small portion of the suite of innovations and products we are crafting. In much the same way that Mitch Kapor saw that VisiCalc needed graphics and text (Lotus 1-2-3) in order to become an order of magnitude larger business, and Microsoft then trumped their efforts by combining Excel with Word and PowerPoint to create an even larger business, I believe that between Preston Gates and Attenex we can articulate and build our equivalent of Microsoft Office. We will not get stuck like the VisiCalc folks, who thought their innovation would last forever as a viable business.

The above are some extended thoughts. They keep me optimistic that we are on the right track and can succeed, but they by no means keep me complacent.

Thanks again for coming down this afternoon and seeing a snapshot of the progress we are making.

From: Johnson, Gerry (SEA) [gerryj@prestongates.com] Sent: Wednesday, May 23, 2001 8:27 PM To: Skip Walter Cc: +Executive Committee (FIRM); Smith, Martin F. (SEA) Subject: RE: Haystack Visualization Demo

Skip – thanks very much for this thorough and thoughtful response. In its face, a substantive response defeats me other than to say that I had some insight into your eventual answer here, but couldn't be more pleased to have this complete an explanation. Thanks again. I'm sharing this with our management committee and Marty. G

While the demo was very impressive, we still had a long way to go.

As mentioned above, most of our development work to date was from a technology centered design viewpoint. In parallel, the human centered design team was observing Martha Dawson’s DATG group to understand the overall workflow as well as the work of the individual document reviewers.

The overall HCD process we followed is:

We rapidly iterated between the User Research and Prototyping phases.

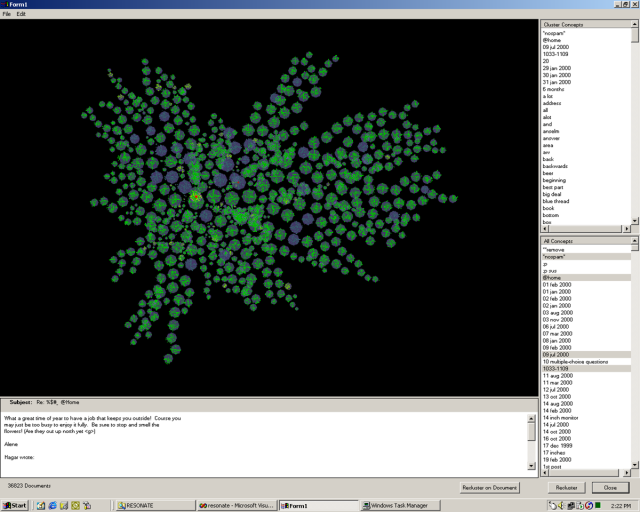

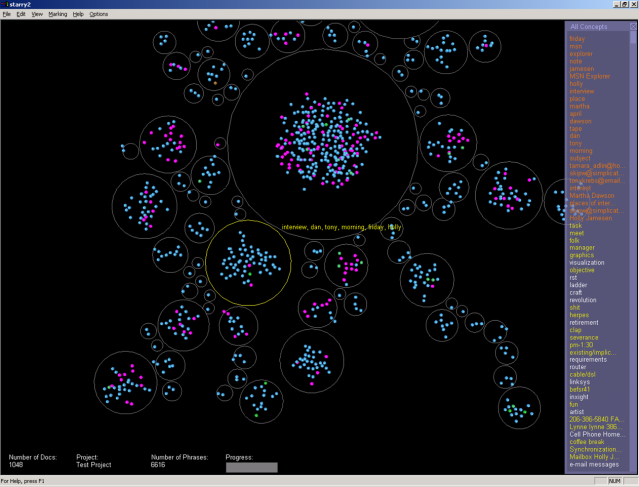

Neither the underlying graphics package we were trying to use nor the algorithms we were trying to employ would scale. We were struggling to get good performance on hundreds of documents, while we knew that we had to display tens of thousands to millions of documents. So we switched from Java to C++ to get the best machine performance and chose OpenGL as our graphics standard so that we could do both 2D and 3D information displays. The following slides illustrate our progress in analyzing and displaying documents.

As part of our technology centered design focus, we believed that our ultimate visualization would need to be in three dimensions. We got very early indications that the lawyer reviewers were very uncomfortable navigating and understanding a 3D abstract conceptual space. However, as good technologists we figured that we could overcome this problem. As it turns out, we never did.

We used a variety of tools to prototype 3D clustering. The following screen shot shows one of the prototypes we built in OpenGL for quick interaction studies with potential users; it was just slightly more sophisticated than a paper prototype.

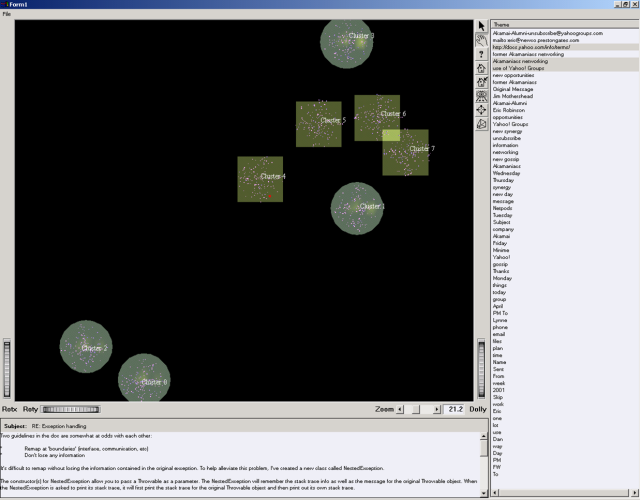

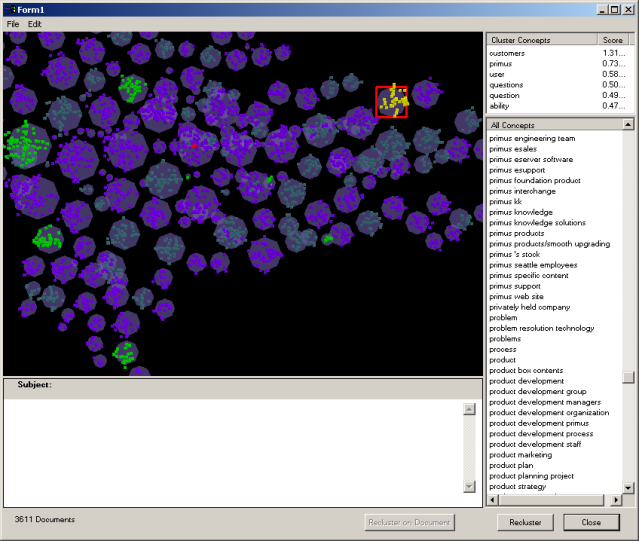

Now that we had some document processing going on, we could exploit different panes within the 3D interface to show different types of clusters in the overall document space along with a concept pane on the right and a mail message viewing pane on the bottom. The core components of the user interface were starting to show up.

With the basic components prototyped, it was time to experiment with large document collections, different styles of clustering, and what kinds of concept extraction we wanted.

Over the course of a month, we tried more than 20 different types of concept displays but none were really helping potential users make sense of the information displayed.

Now that we had basic capabilities, it was time to experiment with what kinds of user interactions were required. We explored the different parts of the user interface to see which components should be actionable and what should happen when we clicked on a component. Little did we know that for the next six years we would constantly have to tweak what it meant to do hit highlighting as we added or changed functionality. The more information you display, the clearer you have to be about what is activated.

An important value that Preston Gates brought to the development process was bringing technology industry luminaries by to get demonstrations of what we were up to. One of the most fun demos that we did was for William H. Gates, Sr. (yes, that is Bill Gates's dad and one of the named partners of Preston Gates & Ellis). Gates, Sr. would usually come by Preston Gates in the summer to address the summer associates about his views of what it means to be a lawyer. For this summer visit, Gerry Johnson persuaded Gates, Sr. to come by and see that a law firm could fund innovative software development. Gerry also wanted to give Gates, Sr. visibility into how large the eDiscovery problem was growing for Microsoft, since Preston Gates did most of Microsoft's eDiscovery work.

We prepared more extensively than usual for this demo. By the time Gates, Sr. arrived, he was clearly quite tired. I was concerned that, since we were running late, we would put him to sleep in a darkened room. So I shortened my introductory slides and got right to the demo, showing him its current state.

Just at the point that I thought I had put Gates to sleep, he straightened up and looked at me and said “So how many lawyers does it take to annotate a given document and the collection of documents with all those concepts?”

I replied “No lawyers at all. Our content analytics software is able to figure out all the meaningful concepts to each collection of documents. Everything you are seeing was done automatically.”

He looked at me again like I hadn’t understood the question, “No. Really. How many lawyers did it take to mark up these concepts?”

I repeated “None.”

Bill Gates, Sr., then turned to Gerry Johnson and said “Gerry. Really. How many lawyers does it take to identify these concepts?”

Gerry answered “None.”

As the implications of what we’d just demonstrated dawned on him, he asked “Has anybody demoed this to my son Bill, yet?”

Nervously, we all answered that we had not demoed it to anyone at Microsoft yet.

Gates then almost shouted “Well, will you quickly go over and demonstrate this to him so he’ll quit writing those stupid emails that get him in all that trouble with the Justice Department?”

After we stopped convulsing in laughter, we went on with the demo. Clearly, he understood the implications.

Until this point, we used proximity as the orienting principle both for clusters in the larger space and for documents within a cluster. Proximity implied that documents or clusters that were close together were more related than documents or clusters that were far apart. While proximity worked at the larger view, it did not work well at the cluster level. Proximity gave some information, but didn’t really give you a sense of how the documents or clusters were related. This screen shot shows our first prototype for orienting documents within a cluster.
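To make the proximity principle concrete, here is a minimal sketch, assuming each document is represented as a bag of concept counts and that "closeness" is measured with cosine similarity. Both are common textbook choices and are assumptions here; the post does not say which representation or distance Attenex actually used, and the concept names below are hypothetical. The sketch also shows the limitation described above: a single distance says *that* two documents are near each other, while listing the shared concepts that drive the similarity is one way to say *how* they are related.

```python
import math
from collections import Counter


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two concept-count vectors (0.0 to 1.0 for counts)."""
    dot = sum(a[c] * b[c] for c in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty document is unrelated to everything
    return dot / (norm_a * norm_b)


def distance(a: Counter, b: Counter) -> float:
    """Proximity as distance: 0.0 means identical concept mix, 1.0 means nothing shared."""
    return 1.0 - cosine_similarity(a, b)


def shared_concepts(a: Counter, b: Counter) -> list:
    """Concepts both documents share, ordered by their contribution to the similarity."""
    shared = set(a) & set(b)
    return sorted(shared, key=lambda c: a[c] * b[c], reverse=True)


# Hypothetical documents, each reduced to concept counts.
doc_a = Counter({"merger": 3, "antitrust": 2, "browser": 1})
doc_b = Counter({"merger": 2, "antitrust": 1})
doc_c = Counter({"holiday": 2, "party": 1})

print(round(distance(doc_a, doc_b), 3))  # 0.044: close together
print(distance(doc_a, doc_c))            # 1.0: nothing in common
print(shared_concepts(doc_a, doc_b))     # ['merger', 'antitrust']: why they are close
```

Cosine similarity ignores document length, so a long memo and a short email about the same topics still land near each other; that is one reason it is a popular default, though other distance measures would work with the same structure.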

At the same time that we were working on clusters and orientation, we started working on both color and transparency. Our users needed some way to distinguish the markings on a document (responsive, non-responsive, privileged …). We wanted the transparency capability so we could show more information on smaller screens by having overlay areas (and it also looked cool). We quickly found that we had to worry about human factors issues like color schemes for those with different forms of color blindness.

As we got to the end of August, the many prototypes started coming together into a coherent whole. We could content analyze the documents, store them in a database, display the documents in clusters and spines, color code the documents, show the key concepts, and display a document in a viewer.

Once we had gotten this far, I could finally see a 30 year dream come true. I wrote this memo to Attenex employees and to our Preston Gates partners at the end of August, 2001.

Email Message from Skip to Attenex Staff: August 31, 2001

In life there are little things and big things. In the context of business, August 15, 2001, was a “big thing” day for me.

In 1968 I was fortunate to get a job in a psychophysiology research lab at Duke Medical Center at the start of my sophomore year in college. We ran experiments on human subjects looking at their physiological responses to behavior modification therapies and to different psychiatric drugs. To better deal with experimental control and real time data analysis of EEGs and EKGs, we purchased a Digital Equipment PDP-12 (the big green machine). It had a mammoth 8000 bytes of memory and two pathetic tape drives that held 256,000 bytes of storage.

Embedded in the rack of the computer was a big green CRT which could display wave forms as well as text. A simple teletype device served as the keyboard. While we were controlling the experiments, we displayed in real time the wave forms from the physiological data of the human subjects. We experimented with multi-dimensional displays of EKG vs EEG vs the user task analysis. It was so fun to get lost in "data space." [A former HCDE student, Denise Bale, calls this "dating her data".]

Along with doing all the programming for the lab experiments, I got to use the machine to play my first computer game (Spacewar). It was so cool being able to control a space ship in the solar system and have it affected by the gravity of the planets on the CRT. There was no mouse at that time, but we used several potentiometers and toggle switches to control the X, Y and Z coordinates along with the firing of guns. Controlling green phosphor objects was a real feat for those of us who have no hand-eye coordination.

One semester, while procrastinating on several term papers, I wrote a text formatting application called Text12, modeled on Text360 for the large IBM mainframes of the time. The formatting commands were eerily similar to the HTML markup that we know today. As a result, I could enter and edit the text of my papers and then print them out on a letter quality device, eliminating all the messiness of a manual typewriter and white-out. Several times at 2 a.m., I hallucinated about combining Spacewar, Complex Wave Form Pattern Detection, and Text12 to take the electronic texts that I was creating, analyze them, and display them in three-dimensional spaces by the relatedness of the concepts within the papers. I got carried away thinking of a new document being indexed and "blasting" links throughout the galaxy of documents. I could almost feel the gravitational attraction of the important documents.

Over the next 10 years, as computer processing power grew from the PDP-12 to the PDP-11 to the DEC VAX computers (wow, 4 megabytes of virtual memory space for a program and 60 megabyte hard disks), I would periodically do a midnight coding project to try to bring my hallucinations from 1968 into reality. It was a nice idea, but the algorithms, CPU power, and memory were never enough. And there were precious few electronic text sources available to actually index unless I wanted to type them in myself.

As I became a manager and began to acquire research budgets, I would squirrel away a little money each year to see if the technology was ready to tackle the vision. The technology was never ready and there was relatively little research into the indexing and display of document collections until the early 1990s. The other side of the coin was that there was no clear idea of the business value of such a tool. We’d use these prototypes to try and impress internal funders to create some larger research projects. But nobody ever funded us beyond the prototypes.

During this time I hooked up with Russ Ackoff of the Wharton School at the University of Pennsylvania. One of the many "idealized designs" he worked on was a distributed National Library System, which he published a book about. This design called for all the texts to be in electronic format and available for searching. A key feature of the system was to generate "Invisible Universities": using the reference lists of published papers and books, the system would find out who references whom and then create influence diagrams of how ideas evolve. I was really hooked on the possibilities.

One of the many reasons I joined Primus a couple of years ago was to bring this vision to reality using the Primus Knowledge Engine as a foundation. We even licensed the Inxight ThingFinder software to help us do the indexing we needed to automatically author “solutions” for our knowledge base. We got started but it became clear that we had no visualization talent within the engineering department and no clear idea of the business driver for such a technology.

Which brings us to Preston Gates and Ellis (now K&L Gates) and Attenex. Thanks to Marty Smith, who connected this semantic indexing and visualization with the electronic discovery problem, we now had the baseline tool to see the dream come true. Thanks to the efforts of Eric, last week we were able to connect the indexing capabilities of Microsoft tools so that we could inhale MS Office documents into the document analysis tool and generate concepts from Word, PowerPoint, Excel, HTML, and Adobe PDF documents. Then we were able to load an Attenex Patterns Document Mapper database with my research papers from the last several years about customer profiles, document visualization, and knowledge management.

Then Kenji and Dan figured out how to cluster long documents and normalize the frequencies of the concepts. And Lynne added the final layer: a document viewing window for the multiple formats, along with cleaning up the interaction with the concept window panes on the right side of the Patterns display.

At 5PM yesterday, I saw my 30-year dream come alive. I was able to display my research papers. I navigated around the clusters and the concepts. And then when I selected a document, whether it was MS Word or a PDF, up it would pop in its own document viewer. Unbelievable. The only thing missing is the ability to index the books that I have in my home library.

But synchronicity strikes again. Just this week, Amazon.com started selling electronic versions of the popular management texts that are a core part of my library. They come in either Microsoft Reader or Adobe eBook format. I quickly bought ebooks in each of the formats to see if we could index them. Of course, they are protected against that. So close, yet so far. But then it occurs to me that books are intellectual property. I bet that someone in the Intellectual Property Practice at K&L Gates was involved in negotiating the licenses for some of the book properties. Sure enough, several folks in the group were. So hopefully the last step in the journey of the dream is close at hand: the ability not only to pour my own writings, email, research reports, and published papers into the Attenex Patterns document database, but also to get full-length books indexed.

Now I will be able to SEE the idea and concept relationships between all these wonderful publications that I can only fuzzily keep in my human memory today. I can’t wait to glean new insights as I index more documents and as I use the re-cluster on anchor documents to see relationships I’ve never been able to see before. I look forward to being able to publish meta-data about a corpus of documents and open up a whole new field of Document Mining.

As a researcher, teacher, and business person, yesterday was the happiest day of my professional life. My heartfelt thanks to all of you who’ve helped bring these concepts to life.

Skip

Well, we were really cooking now.

In the last year (six months before company formation and six months after formation), we’d gone through the first three phases of the HCD process – user research, prototypes (paper, behavioral, and appearance) and value (monetization and supporting human values). Now it was time to turn to the other 90% of software development – user experience and turning a prototype into a product.
